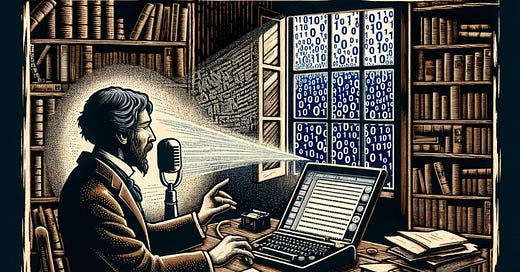

I finally got around to using my new dictation software, StorySpeak AI. The "AI" bit on the end sort of gives it away, dunnit? I don’t think this program is doing what the old Dragon Dictate or Siri or any of the first-gen, second-gen programs were doing. Partly, I say that because it is so scary accurate.

I was pretty excited when I found out that Microsoft had bought the license to Dragon’s core software—or perhaps just bought the core software outright. I don’t know. But either way, it promised a reprieve from Siri, which as a dictation solution is a garbage fire. I don’t find Google’s dictation offering much better. Anyway, long story short, I’ve been using this thing all day, grinding out huge slabs of text that I then cut down into something readable.

It’s been a challenge. I remember how hard it was trying to learn how to use Dragon Dictate ten or fifteen years ago, after I broke my arm while writing one of the Dave books, I think. Everything felt hard; everything was a grind. Eventually, I got pretty fluent. I learned to dictate my formatting and quotation marks and so on, but that took a long time.

It feels like I’m learning everything all over again. Weirdly, the problem arises because StorySpeak does a lot of the formatting itself.

So, I have to unlearn about a decade and a half of deeply ingrained habits when speaking into a microphone to tell a story.

The bigger challenge, however, is that you don’t see the text emerging on the screen as you talk. That was always the most science fiction element of dictation for me. I used to love the way the words would just spill across the screen as I spoke them. You don’t get that with StorySpeak. You dictate the entire block of text, and then you transfer that audio file to the web page. It sucks up the data, runs its analysis, and sometime later, it spits out the finished words.

That’s where the magic happens, because the text, the transcription, is way more accurate than I’ve been able to get out of Microsoft or Apple or Google. I guess that as they integrate more large language models into their own software, their dictation will get better. But for the moment, this thing is crushing them.

I do find it really, really difficult, however, to dictate without being able to see the text scrolling across the page. It’s like I lose track of where I am in the story or something. It feels hard, like really, really hard. I don’t know whether it will get easier as I go on. I suspect so, but for now, it’s difficult. However, it is so accurate that I’m willing to muscle my way through.

And before Elana asks, yes, it's fine with Australian accents.

That's almost certainly a startup-mode UI glitch. It'll be running locally and blatting text up on the screen before the next subscription cheque is due.

Where you'll really freak out, though, is when it starts to run ahead of you, like predictive spelling correction does now. You'll question whether it's still you making up the story...